The settings screen offers many customisable defaults for item configuration. Link depth controls how far the app follows links from the page you selected. The default setting follows only links posted on the root level (the page you selected).
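To make link depth and the per-page link limit concrete, here is a minimal sketch of a depth-limited traversal. It is not WebCopier's actual implementation. The function name `links_to_follow`, the in-memory `site` mapping, and the reading of "root level" as a depth of 1 are all assumptions made for illustration.

```python
from collections import deque


def links_to_follow(start, outgoing_links, link_depth=1, max_links_per_page=None):
    """Return the pages reached from `start` within `link_depth` link hops.

    `outgoing_links` is a hypothetical mapping of page -> list of linked pages,
    standing in for the links a real crawler would parse from each page.
    """
    seen = {start}
    order = [start]
    queue = deque([(start, 0)])
    while queue:
        page, depth = queue.popleft()
        if depth >= link_depth:
            continue  # links found on pages at the depth limit are not followed
        links = outgoing_links.get(page, [])
        if max_links_per_page is not None:
            links = links[:max_links_per_page]  # cap the links taken from each page
        for target in links:
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append((target, depth + 1))
    return order


# Hypothetical site structure used only for this example.
site = {
    "home": ["about", "blog"],
    "blog": ["post-1", "post-2"],
    "about": ["team"],
}

root_only = links_to_follow("home", site, link_depth=1)
two_levels = links_to_follow("home", site, link_depth=2)
```

With a depth of 1, only the links on the selected page are followed. Raising the depth to 2 also follows the links found on those pages, which is why deeper settings can download far more content.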

Like webcopier update

You can update the content of the web page by tapping the reload icon on the item.

Like webcopier download

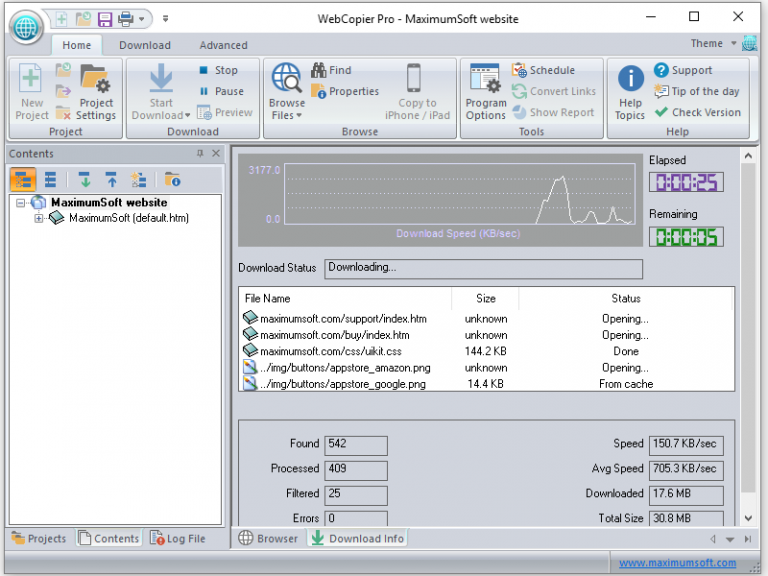

1. Open WebCopier and tap the plus (+) icon in the main interface.
2. Choose the page, either by copying the link from an external browser and pasting it into WebCopier, or by browsing to the page with the app's built-in browser.
3. Edit the item configuration: set the URL and title of the website, select the link depth and the maximum number of links per page to follow, then tap the save icon (it might be hidden behind the keyboard) to save the item. You can edit the item configuration later by tapping the settings icon on the item.
4. Optional: choose whether to download images, PDFs, and scripts.
5. Download the web page: on the main app screen, find the item and tap the download icon to start downloading. Downloading over WiFi is recommended, although the app does not currently enforce it.

Like webcopier plus

Note that if you use IP anonymisation (e.g. with the CNIL Exemption), we cannot provide a precise IP address, only the associated range. It is up to you whether to add this range as an exclusion or as monitoring. It is also possible to exclude traffic through the Exclusions available in Data Management. You could then base your exclusion on one or more criteria such as city, organisation, ISP, User-Agent, etc.
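As an illustration of excluding traffic by IP range or User-Agent, the following sketch filters a list of hits. It is not the analytics tool's own logic. The `is_excluded` helper, the hit dictionaries, and the example ranges (taken from the address blocks reserved for documentation) are assumptions.

```python
import ipaddress


def is_excluded(hit, excluded_ranges=(), excluded_user_agents=()):
    """Return True if the hit matches an excluded IP range or User-Agent fragment."""
    address = ipaddress.ip_address(hit["ip"])
    for cidr in excluded_ranges:
        if address in ipaddress.ip_network(cidr):
            return True
    user_agent = hit.get("user_agent", "").lower()
    # Case-insensitive substring match on the User-Agent string.
    return any(fragment.lower() in user_agent for fragment in excluded_user_agents)


# Hypothetical hits; the field names are illustrative only.
hits = [
    {"ip": "192.0.2.17", "user_agent": "Mozilla/5.0"},
    {"ip": "198.51.100.4", "user_agent": "ExampleCrawler/1.0"},
    {"ip": "203.0.113.9", "user_agent": "Mozilla/5.0"},
]

kept = [
    hit for hit in hits
    if not is_excluded(hit, ["192.0.2.0/24"], ["examplecrawler"])
]
```

Matching on a whole range rather than a single address is what makes this approach workable when anonymisation hides the precise IP.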

If you select Save as, you can choose where to save the download, such as your desktop. After the WebCopier download has completed, click the ...

When traffic is excluded, the exclusion is based on the IP addresses or User Agents of robots in the IAB/ABC list. However, the IAB cannot record every robot in existence. Some, especially recent ones that have not yet been listed, may bypass our exclusions and cause unusual spikes in your traffic. When this is the case, you can often recognise robots by their abnormal behaviour. Using indicators such as the following, you can check for this behaviour amongst your visitors:

- Time spent/pages: if it is short, it may indicate a crawler.
- URLs (referrer sites): a spike in visits from an unknown domain is suspicious.
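The two indicators named here, time spent per page and referrer spikes, can be sketched as simple checks over visit data. This is a rough heuristic under stated assumptions, not a method from the analytics tool. The function names, record fields, and both thresholds are hypothetical and would need tuning against real traffic.

```python
from collections import Counter


def short_time_per_page(visits, min_seconds_per_page=2.0):
    """Return ids of visits whose average time per page is below the threshold."""
    flagged = []
    for visit in visits:
        pages = max(visit["pages"], 1)  # guard against division by zero
        if visit["seconds"] / pages < min_seconds_per_page:
            flagged.append(visit["id"])
    return flagged


def referrer_spikes(referrers, known_domains, spike_threshold=100):
    """Return unknown referrer domains seen at least `spike_threshold` times."""
    counts = Counter(referrers)
    return sorted(
        domain for domain, count in counts.items()
        if domain not in known_domains and count >= spike_threshold
    )


# Hypothetical sample data.
visits = [
    {"id": "a", "seconds": 3, "pages": 30},   # 0.1 s per page: crawler-like
    {"id": "b", "seconds": 240, "pages": 4},  # 60 s per page: human-like
]
referrers = ["x.example"] * 150 + ["partner.example"] * 200 + ["y.example"] * 3

fast_visits = short_time_per_page(visits)
spikes = referrer_spikes(referrers, known_domains={"partner.example"})
```

Neither check proves that a visitor is a robot. Each one only narrows down which visits deserve a closer look before an exclusion is added.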

We have certain measures in place to prevent robot traffic from polluting your data. An organisation that records all known robots provides us with a list (IAB/ABC), on which we base our traffic exclusions so that bot traffic is not included alongside your other data in the interface.

Some organisations monitor your site's viability with bots: Microsoft System Center Operations Manager, Gomez Agent, Observer, Nagios, etc. These monitoring bots are excluded by default, but you can choose to include them by going to Configuration > Parameters > Monitoring/Excluding traffic > Robots. Off-line bots are not excluded by default, but you can choose to exclude them from the same menu. Some of the more notable ones are Download Ninja, Heritrix, Webcopier, PageNest Pro, WebZip, etc.
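The default behaviour described here, with monitoring bots excluded and off-line bots kept unless you opt in, can be sketched as a small classifier. The bot names come from the text, but matching them as case-insensitive substrings of the User-Agent is an assumption, as are the function names. Real User-Agent strings for these tools may differ, and a short name such as "Observer" could match unrelated agents.

```python
MONITORING_BOTS = (
    "Microsoft System Center Operations Manager",
    "Gomez Agent",
    "Observer",
    "Nagios",
)
OFFLINE_BOTS = ("Download Ninja", "Heritrix", "Webcopier", "PageNest Pro", "WebZip")


def classify_user_agent(user_agent):
    """Return 'monitoring', 'offline', or 'other' for a User-Agent string."""
    lowered = user_agent.lower()
    if any(name.lower() in lowered for name in MONITORING_BOTS):
        return "monitoring"
    if any(name.lower() in lowered for name in OFFLINE_BOTS):
        return "offline"
    return "other"


def is_excluded_by_settings(user_agent, exclude_monitoring=True, exclude_offline=False):
    """Apply the two toggles; the defaults mirror the behaviour described in the text."""
    kind = classify_user_agent(user_agent)
    if kind == "monitoring":
        return exclude_monitoring
    if kind == "offline":
        return exclude_offline
    return False


# Hypothetical User-Agent strings used only for this example.
nagios_agent = "check_http/v2.3 (nagios-plugins 2.3)"
webcopier_agent = "Mozilla/4.0 (compatible; WebCopier v4.6)"
```

Keeping the two groups behind separate toggles reflects the fact that their defaults differ.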
